Banning Open-Weight Models Would Be a Disaster

🌈 Abstract

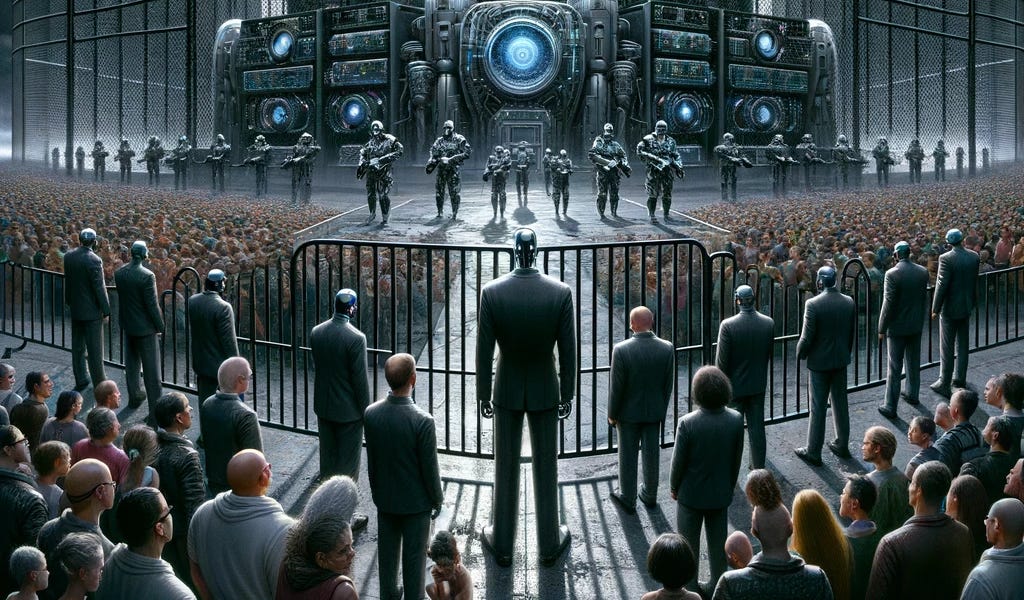

The article discusses the potential risks and benefits of open-weight AI models, and argues against banning access to these models. It highlights how open models can enable innovation, transparency, and resistance to authoritarian regimes, while closed models concentrate power and control. The article responds to a request for public comments by the U.S. Department of Commerce on regulating open-weight AI models.

🙋 Q&A

[01] How should "open" be defined?

1. How should NTIA define "open" or "widely available" when thinking about foundation models and model weights?

- A model is only "widely available" if its weights are available, because reproducing it through training requires large compute and data resources.

- Licensing restrictions can affect availability, but are hard to enforce and can be easily ignored by individuals and state actors.

- If anyone can download and run the model on their own hardware, the model should be considered "open."

1a. Is there evidence or historical examples suggesting that weights of models similar to currently-closed AI systems will, or will not, likely become widely available?

- Yes. There is historical precedent for proprietary software and technologies eventually becoming open source as the technical gap between open and closed solutions shrinks.

- Examples include operating systems, cloud computing, social media, and chat applications.

1b. Is it possible to generally estimate the timeframe between the deployment of a closed model and the deployment of an open foundation model of similar performance?

- The gap is shrinking over time as proprietary knowledge gets distributed.

- Open models have caught up to GPT quickly, and the delay is expected to keep decreasing.

- Releasing a model openly can give an AI creator a competitive advantage and help undercut more capable proprietary models.

1c. How should "wide availability" of model weights be defined?

- This question is not well-defined, as it's unclear how one would count the number of entities with access to closed model weights.

[02] What are the risks and benefits of open vs. closed models?

2. How do the risks compare between open and closed models?

- The biggest threats from AI come from corporations and state-level actors, who will have access to state-of-the-art AI regardless of regulations.

- Open models allow security researchers, academics, NGOs, and regulatory bodies to experiment with and build defenses against advanced AI.

- Open models level the playing field and enable more innovation and economic growth, while a ban would exacerbate inequality and make the U.S. less competitive.

3. What are the benefits of open models?

- Open models democratize access to AI, enabling use cases that are not financially viable with closed models.

- Open models allow academics and startups to build and distribute new applications.

- Open models can be easily integrated into existing workflows and applications.

- Open models can help foster growth among small businesses, academic institutions, and underfunded entrepreneurs.

- Open models have the potential to transform fields like medicine, pharmaceuticals, and scientific research.

- Open models can provide tools for individuals and civil society groups to resist authoritarian regimes, furthering democratic values.

4. What are the risks of open models?

- Open models may pose risks to security, equity, and civil rights, or cause other harms, through misuse, lack of oversight, or lack of accountability.

- The lack of monitoring of open models may worsen challenges such as the creation of synthetic non-consensual intimate images and mass disinformation campaigns.

[03] How should the risks of open models be managed?

5. What are the technical issues involved in managing the risks of open models?

- The article does not provide specific technical details on managing the risks of open models.

6. What are the legal and business issues involved?

- The article argues that laws and regulations should punish people and organizations who abuse AI, rather than banning access to the technology entirely.

- A ban on open models might prevent some individual-level harm, but would expose society to existential threats by concentrating power and control.

7. What regulatory mechanisms can be leveraged to manage the risks of open models?

- The article does not provide specific recommendations on regulatory mechanisms, but suggests that a punitive approach targeting abusers is preferable to a restrictive approach banning access to the technology.

8. How can the strategy for managing open models be future-proofed?

- The article does not provide specific recommendations on future-proofing the strategy for managing open models.

[04] Other issues

9. Are there any other important issues related to open-weight AI models that the article discusses?

- The article argues that banning open models would be a massive impediment to innovation and economic growth, as open models democratize access to AI technology and enable use cases not viable with closed models.

- Open models allow for more transparency and enable broader access for oversight by technical experts, researchers, academics, and the security community.